I have been having trouble with my former low-end VPS provider after two years of quite stable service. They decided to move data centers, and my OpenVZ box ended up corrupted during the move. I had been looking to move for quite a while anyway. For one, I started using Arch Linux a while ago and have been enjoying effortless rolling updates and upgrades every day. My former server had been running Ubuntu 10.04 for 2 years, and for fear of breaking it during updates (yes, it happens more often than one might think) I was stuck with some pretty old libraries. Although I managed to compile PHP and nginx every six months or so to stay up to date, other newer packages required newer libraries, which in turn required a new kernel, and so on.

So I was looking for a VPS provider with Arch images. Amazon AWS is quite expensive, although Arch Linux AMIs are available from Uplink Labs. Besides that, I’ve also been looking to switch to XEN virtualization for guaranteed memory, real swap and other advantages over the OpenVZ and Virtuozzo containers offered by many companies.

I spent the past month trying out several alternatives on the low-end market, and it has been nothing but headaches. So I decided to go for a safe, proven and mainstream provider: Linode. It fit my criteria: Arch images (1.8% of deployments on Linode are Arch), XEN virtualization, a budget-friendly low-end plan, 2TB of data transfer, and promised effortless upgrades. The only downside was their lack of support for PayPal payments (very probably justified), so I had to get a prepaid virtual card.

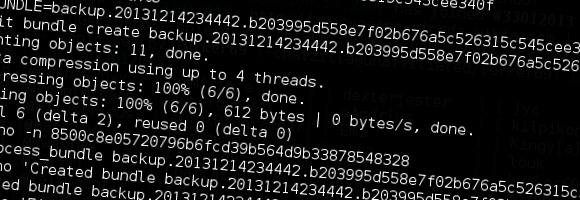

So, as of a couple of days ago, the new home for my dozen or so sites and repositories is a blazingly fast XEN Arch Linux box at Linode. I’m quite sure I won’t be disappointed.

What have you tried? What do you use now?

Published 12 years ago

by soulseekah


tagged hosting, linode, vps, xen in General, Hardware
