Surviving An Internet Blackout

On the 12th of February, Anonymous posted the following pastebin: Operation Global Blackout. In case the pastebin disappears, here’s the plaintext: Operation Global Blackout Anonymous.

To protest SOPA, Wallstreet, our irresponsible leaders and the beloved bankers who are starving the world for their own selfish needs out of sheer sadistic fun, On March 31, the Internet will go Black.

Disclaimer

This article will judge neither the motivation behind the attack nor its validity. It will provide an overview of the underlying technologies, what they mean, how they function at a high level, what happens if the attack does go ahead, and how to be prepared for one. In short: how to understand and survive an Internet blackout.

The technical details

The pastebin states that the world’s 13 Root Name Servers will be attacked in an attempt to disrupt all DNS requests. The Root Name Servers would be so busy answering an overwhelming number of requests from remote machines that they would be unable to answer your domain name lookups (issued via your ISP in a long chain).

A visual explanation of how DNS lookups work will make more sense if you don’t have the resources to read the wiki.

If these are cut off from the Internet, nobody will be able to perform a domain name lookup.
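To make that dependency concrete, here is a toy Python sketch of the lookup chain. The tables and the `resolve` function are invented for illustration and only mimic the root, TLD, and authoritative steps; a real resolver speaks the DNS protocol over the network and usually has some answers cached.

```python
# Toy model of iterative DNS resolution: root -> TLD -> authoritative server.
# All tables are hypothetical in-memory stand-ins for real nameservers.

ROOT = {"com.": "a.gtld-servers.net."}  # root zone: TLD delegations
TLD = {"a.gtld-servers.net.": {"google.com.": "ns1.google.com."}}
AUTH = {"ns1.google.com.": {"google.com.": "173.194.69.138"}}


def resolve(name, root_available=True):
    """Walk the delegation chain for `name`; return an IP string or None."""
    if not root_available:
        # With nothing cached, the very first step of the chain fails.
        return None
    tld = name.split(".")[-2] + "."  # "google.com." -> "com."
    tld_server = ROOT.get(tld)
    if tld_server is None:
        return None
    auth_server = TLD[tld_server].get(name)
    if auth_server is None:
        return None
    return AUTH[auth_server].get(name)


print(resolve("google.com."))                        # 173.194.69.138
print(resolve("google.com.", root_available=False))  # None
```

The second call shows the failure mode the pastebin is counting on: with the first link gone and no cached data, the rest of the chain is never reached.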

Distributed denial-of-service attacks on root nameservers have been attempted in the past, and my bet is that most of us never even knew or noticed, since there are failover mechanisms. The article "There are not 13 root servers" explains how, in reality, the root servers form a network of 100+ machines.

While some ISPs uses DNS caching, most are configured to use a low expire time for the cache, thus not being a valid failover solution in the case the root servers are down. It is mostly used for speed, not redundancy.

Indeed, your ISP will certainly cache DNS lookup requests for speed, and each ISP will have its own individual rules. I called my ISP today to find out the TTL of their root zone cache, but they refused to answer. So I issued a lookup request, and judging by the response times my ISP caches the popular TLD zones (com., net., org.) for only 900 seconds. Most ISPs will obey the TTL of the zone files themselves, as it’s good practice.
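Why a 900-second cache is no failover can be shown with a small sketch. The `TtlCache` class below is a hypothetical illustration, not how any particular ISP resolver is implemented; it uses an injectable clock so the expiry can be demonstrated without waiting.

```python
import time


class TtlCache:
    """Minimal DNS-style cache: each entry expires `ttl` seconds after insertion."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock   # injectable so expiry can be demonstrated
        self._entries = {}    # name -> (value, expiry timestamp)

    def put(self, name, value, ttl):
        self._entries[name] = (value, self._clock() + ttl)

    def get(self, name):
        entry = self._entries.get(name)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]  # stale: the resolver must ask upstream again
            return None
        return value


# Simulated clock so the example does not have to wait 15 minutes.
now = [0.0]
cache = TtlCache(clock=lambda: now[0])
cache.put("com.", "a.gtld-servers.net.", ttl=900)

now[0] = 899
print(cache.get("com."))  # still cached
now[0] = 900
print(cache.get("com."))  # expired -> None
```

Once the entry expires, the resolver has to go back up the chain, which is exactly the step that would be unavailable during an attack.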

Surviving

the hi-tech way

Much preparation is needed in order to be fully equipped to survive such a blackout. The first thing that comes to mind is to change DNS lookup servers. You could use Google’s Public DNS, although they are most probably playing by the TTL rules as well; so unless they have a failover backup plan, they won’t be of any help. Even if they do, Google’s public DNS servers (8.8.8.8 and 8.8.4.4) may be targeted too. So what can one do?

Set up one’s own DNS server. Being autonomous is best.

Meet bind and meet the Root Zone File. Combine the two and set your DNS server settings to localhost or the network address of that old 386 that’s been collecting dust in your garage waiting for its time to shine again.

Install bind9

Get binaries from here, run sudo apt-get install bind9, or use your favorite package manager.

Configure bind9

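bind will already ship with a db.root hints file, which lists the 13 root nameservers. This won’t help, since they would be down. Download the root.zone file from here among other places. Open up named.conf.default-zones and add a new zone entry (if the file already defines a hint zone for ".", replace that entry, since bind will not accept two definitions of the same zone):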

    zone "." {
        type master;
        file "/path/to/root.zone";
    };

Make sure you don’t miss a single semicolon: every statement inside the zone block, and the block itself, ends with one. Restart bind.

Becoming the root servers

Configure the hosts file on your DNS server to have all root name servers point to localhost instead.

    127.0.0.1 a.root-servers.net
    127.0.0.1 b.root-servers.net
    127.0.0.1 c.root-servers.net
    127.0.0.1 d.root-servers.net
    127.0.0.1 e.root-servers.net
    127.0.0.1 f.root-servers.net
    127.0.0.1 g.root-servers.net
    127.0.0.1 h.root-servers.net
    127.0.0.1 i.root-servers.net
    127.0.0.1 j.root-servers.net
    127.0.0.1 k.root-servers.net
    127.0.0.1 l.root-servers.net
    127.0.0.1 m.root-servers.net
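If you would rather generate those entries than type them, a short Python sketch like the following should do. The `hosts_lines` helper and its `address` parameter are my own naming for illustration; the output still has to be appended to the hosts file by hand or by your own tooling.

```python
import string

# Root server names run from a.root-servers.net to m.root-servers.net (13 letters).
ROOT_LETTERS = string.ascii_lowercase[:13]


def hosts_lines(address="127.0.0.1"):
    """Return one hosts-file line per root server, all pointing at `address`."""
    return [f"{address} {letter}.root-servers.net" for letter in ROOT_LETTERS]


print("\n".join(hosts_lines()))
```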

Testing

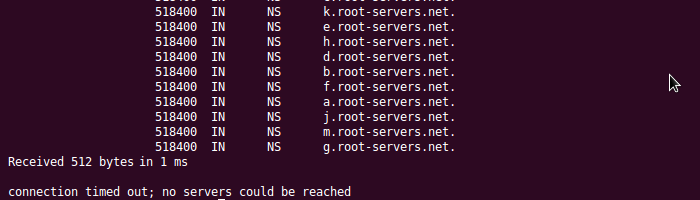

You can use nslookup (available on Windows as well) or, my favorite, dig. Issue a lookup request for any domain with tracing on.

    $ dig @localhost google.com +trace

    ; <<>> DiG 9.7.0-P1 <<>> @localhost google.com +trace
    ; (2 servers found)
    ;; global options: +cmd
    . 518400 IN NS j.root-servers.net.
    . 518400 IN NS a.root-servers.net.
    . 518400 IN NS i.root-servers.net.
    . 518400 IN NS d.root-servers.net.
    . 518400 IN NS l.root-servers.net.
    . 518400 IN NS h.root-servers.net.
    . 518400 IN NS m.root-servers.net.
    . 518400 IN NS k.root-servers.net.
    . 518400 IN NS b.root-servers.net.
    . 518400 IN NS g.root-servers.net.
    . 518400 IN NS e.root-servers.net.
    . 518400 IN NS c.root-servers.net.
    . 518400 IN NS f.root-servers.net.
    ;; Received 512 bytes from 127.0.0.1#53(127.0.0.1) in 1 ms

    com. 172800 IN NS e.gtld-servers.net.
    com. 172800 IN NS l.gtld-servers.net.
    com. 172800 IN NS i.gtld-servers.net.
    com. 172800 IN NS h.gtld-servers.net.
    com. 172800 IN NS c.gtld-servers.net.
    com. 172800 IN NS f.gtld-servers.net.
    com. 172800 IN NS m.gtld-servers.net.
    com. 172800 IN NS k.gtld-servers.net.
    com. 172800 IN NS g.gtld-servers.net.
    com. 172800 IN NS d.gtld-servers.net.
    com. 172800 IN NS b.gtld-servers.net.
    com. 172800 IN NS j.gtld-servers.net.
    com. 172800 IN NS a.gtld-servers.net.
    ;; Received 500 bytes from 127.0.0.1#53(m.root-servers.net) in 1 ms

    google.com. 172800 IN NS ns2.google.com.
    google.com. 172800 IN NS ns1.google.com.
    google.com. 172800 IN NS ns3.google.com.
    google.com. 172800 IN NS ns4.google.com.
    ;; Received 164 bytes from 192.43.172.30#53(i.gtld-servers.net) in 94 ms

    google.com. 300 IN A 173.194.69.138
    google.com. 300 IN A 173.194.69.100
    google.com. 300 IN A 173.194.69.139
    google.com. 300 IN A 173.194.69.101
    google.com. 300 IN A 173.194.69.102
    google.com. 300 IN A 173.194.69.113
    ;; Received 124 bytes from 216.239.34.10#53(ns2.google.com) in 100 ms

So there you go: none of the 13 root servers was even required to look up google.com. Our zone file contained all the necessary information to complete the full chain. The gTLD servers responded with the necessary entries for Google’s nameservers.

Further considerations

bind is a very powerful tool. There are other, more intricate ways to set it up to suit this purpose. Find out more about it online and learn it, as it might actually be your lifesaver on the Internet.

The root.zone file does not change often. It contains the TLD delegations, and TLDs rarely change nameservers, so most of it would stay valid for months if not years.
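To see what the file actually holds, here is a rough Python sketch that pulls the TLD delegations out of zone-file text. It assumes one record per line in the five-field layout seen in the trace output (owner, TTL, class, type, data); the `SAMPLE` text and the `tld_nameservers` function are illustrative stand-ins, and a real root.zone is far longer.

```python
from collections import defaultdict

# Sample lines in the five-field layout: owner, TTL, class, type, data.
SAMPLE = """\
. 518400 IN NS a.root-servers.net.
com. 172800 IN NS a.gtld-servers.net.
com. 172800 IN NS b.gtld-servers.net.
net. 172800 IN NS a.gtld-servers.net.
"""


def tld_nameservers(zone_text):
    """Map each TLD to the nameservers it is delegated to, skipping the root itself."""
    delegations = defaultdict(list)
    for line in zone_text.splitlines():
        fields = line.split()
        if len(fields) < 5 or fields[0].startswith(";"):
            continue  # blank line, comment, or unexpected layout
        owner, _ttl, _cls, rtype, data = fields[:5]
        if rtype == "NS" and owner != ".":
            delegations[owner.lower()].append(data.lower())
    return dict(delegations)


for tld, servers in tld_nameservers(SAMPLE).items():
    print(tld, servers)
```

Running the same function over two downloads of root.zone taken some weeks apart would be one way to check how little the delegations move.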

Similarly you can compile zone files for specific domains, in case attacks are performed on gTLD name servers, which answer for the com. zone in particular. That way, all your favorite sites would be independent. There are various methods to accomplish this and more. Increased caching, like using the min-cache-ttl directive, might also help gather data for your most visited sites.

The methods described here are to be used in emergencies only, as they may yield unexpected results. And always keep security in mind if you’re going to let your community use your server: DNS vulnerabilities have to be accounted for.

the low-tech way

Go out for a walk or a jog, have a picnic with some friends, ride your bike, read a book, get a life, etc.

Conclusion

DNS is one of the weak links in the mechanics of the Internet. A decentralized and distributed DNS may potentially be more robust, and outside the control of the handful of servers it currently relies on. There are a couple of projects out there, like DOT-P2P.

Are you prepared for an Internet blackout?